生成型学习算法

1 生成型学习算法简述

我们之前讲到的算法。譬如逻辑回归,试图从\(x\)直接学习得到一组映射关系到\(y\),即通过样本\((x,y)\)学习得到,使得其能够准确的预测\(y\)。这类算法叫做判别性学习算法。

本节我们将介绍生成学习型算法。举个例子,对于一个大象,我们可以为大象构造一个关于其特征的模型。对于一条狗,同样可以为狗构造一个关于关于其特征的模型。对于一个新动物,我们可以根据前述构建的模型,判断这个动物是像大象多一点还是像狗多一点,来确定这动物是大象还是狗。

我们可以根据样本得到大象的特征模型\(p(x|y=0)\)和狗的特征模型\(p(x|y=1)\),同时也可以得到\(p(y)\)。根据贝叶斯公式,有如下:

$$\max (p(y|x)) = \max (\frac{p(x|y)p(y)}{p(x)}) = \max (\frac{p(x|y)p(y)}{p(x|y = 1)p(y = 1) + p(x|y = 0)p(y = 0)})$$对于我们做预测的时候,可以不考虑分母。

$$\max (p(y|x)) = \max (p(x|y)p(y))$$为什么不考虑分母?我们可以将\(p(x|y)p(y)\)简化为\(f(y)\),因此分母就是\(f(1)+f(0)\)。如果我们通过样本对完成了建模,因此分母就是一个常数了,因此没有计算的必要了。

2 高斯判别分析

2.1 多维高斯分布介绍

略,详见cs229-note2.pdf

2.2 高斯判别分析模型

对于一个分类问题,\(y \in (0,1)\)。假设\(x\)是连续的随机变量,服从高斯分布。具体描述如下:

$$y \sim Bernoulli(\phi)$$ $$x|y = 0 \sim N({\mu _0},\Sigma)$$ $$x|y = 1 \sim N({\mu _1},\Sigma)$$ $$p(x|y = 0) = \frac{1}{{{(2\pi )}^{\frac{n}{2}}}|\Sigma {|^{\frac{n}{2}}}}\exp ( - \frac{1}{2}{(x - {\mu _0})^T}{\Sigma ^{ - 1}}(x - {\mu _0}))$$ $$p(x|y = 1) = \frac{1}{{{(2\pi )}^{\frac{n}{2}}}|\Sigma {|^{\frac{n}{2}}}}\exp ( - \frac{1}{2}{(x - {\mu _1})^T}{\Sigma ^{ - 1}}(x - {\mu _1}))$$2.3 公式推导

我们设置\(\phi = p(y = 0)\)。根据前面的分析,在给定样本的情况下,我们需要保证下式最大。

$$\prod\limits _{i = 1}^m {p({y^i}|{x^i})} = \prod\limits _{i = 1}^m {p({x^i}|{y^i})p({y^i})}$$这里为了简写,我们设置\({z _0} = x - {\mu _0}\) ,\({z _1} = x - {\mu _1}\)。取自然对数后,得到最大释然函数:

$$\ell ({\mu _1},{\mu _1},\Sigma ,\phi ) = \sum\limits _{i = 1}^m {\ln \{ {{[\frac{1}{{{{(2\pi )}^{\frac{n}{2}}}|\Sigma {|^{\frac{n}{2}}}}}\exp ( - \frac{1}{2}z _0^T{\Sigma ^{ - 1}}{z _0})]}^{1\{ {y^i} = 0\} }}{{[\frac{1}{{{{(2\pi )}^{\frac{n}{2}}}|\Sigma {|^{\frac{n}{2}}}}}\exp ( - \frac{1}{2}z _1^T{\Sigma ^{ - 1}}{z _1})]}^{1\{ {y^i} = 1\} }}\} } $$ $$\ell ({\mu _0},{\mu _1},\Sigma ,\phi ) = \sum\limits _{i = 1}^m {\{ 1\{ {y^i} = 0\} [ - \frac{1}{2}z _0^T{\Sigma ^{ - 1}}{z _0} - \frac{n}{2}\ln 2\pi - \frac{n}{2}\ln |\Sigma | + \ln \phi ] + 1\{ {y^i} = 1\} } [ - \frac{1}{2}z _1^T{\Sigma ^{ - 1}}{z _1} - \frac{n}{2}\ln 2\pi - \frac{n}{2}\ln |\Sigma | + \ln (1 - \phi )]\}$$然后我们分别对各个参数求偏导数:

$$\frac{{\partial \ell ({\mu _0},{\mu _1},\sum ,\phi )}}{{\partial \phi }} = \sum\limits _{i = 1}^m {(\frac{{1\{ {y^i} = 1\} }}{\phi } - \frac{{1\{ {y^i} = 0\} }}{{1 - \phi }})} = \sum\limits _{i = 1}^n {(\frac{{1\{ {y^i} = 1\} }}{\phi } - \frac{{1 - 1\{ {y^i} = 1\} }}{{1 - \phi }})} = 0$$所以有:

$$\phi = \frac{1}{m}\sum\limits _{i = 1}^n {1\{ {y^i} = 1\} }$$然后对\(\mu _0\)求导:

$${\nabla _{{\mu _0}}}\ell ({\mu _0},{\mu _1},\Sigma ,\phi ) = \sum\limits _{i = 1}^m {{\nabla _{{\mu _0}}}( - \frac{1}{2}{{({x^i} - {\mu _0})}^T}{\Sigma ^{ - 1}}({x^i} - {\mu _0}))1\{ {y^i} = 0\} }$$ $${\nabla _{{\mu _0}}}\ell ({\mu _0},{\mu _1},\Sigma ,\phi ) = - \frac{1}{2}\sum\limits _{i = 1}^m {{\nabla _{{\mu _0}}}( - \mu _0^T{\Sigma ^{ - 1}}{x^i} - {{({x^i})}^T}{\Sigma ^{ - 1}}{\mu _0} + \mu _0^T{\Sigma ^{ - 1}}{\mu _0})1\{ {y^i} = 0\} }$$我们对上面的式子分头计算。

$${\nabla _{{\mu _0}}}\mu _0^T{\Sigma ^{ - 1}}x = {\nabla _{{\mu _0}}}tr(\mu _0^T{\Sigma ^{ - 1}}{x^i}) = {[{\nabla _{\mu _0^T}}tr(\mu _0^T{\Sigma ^{ - 1}}{x^i})]^T} = {[{({\Sigma ^{ - 1}}{x^i})^T}]^T} = {\Sigma ^{ - 1}}{x^i}$$ $${\nabla _{{\mu _0}}}{({x^i})^T}{\Sigma ^{ - 1}}{\mu _0} = {\nabla _{{\mu _0}}}tr({({x^i})^T}{\Sigma ^{ - 1}}{\mu _0}) = {\nabla _{{\mu _0}}}{\mu _0}{({x^i})^T}{\Sigma ^{ - 1}} = {({({x^i})^T}{\Sigma ^{ - 1}})^T} = {\Sigma ^{ - 1}}{x^i}$$ $${\nabla _{{\mu _0}}}\mu _0^T{\Sigma ^{ - 1}}{\mu _0} = {[{\nabla _{\mu _0^T}}\mu _0^T{\Sigma ^{ - 1}}{\mu _0}E]^T} = [E\mu _0^T{\Sigma ^{ - 1}} + {E^T}\mu _0^T{({\Sigma ^{ - 1}})^T}] = 2{\Sigma ^{ - 1}}{\mu _0}$$\(\Sigma\)为对角阵。由于求偏微分的数为一个常数,因此该值与其迹的相同。另外,这里使用了一些关于迹的公式。具体详见附录。

所以有:

$${\nabla _{{\mu _0}}}\ell ({\mu _0},{\mu _1},\Sigma ,\phi ) = - \sum\limits _{i = 1}^m {{\nabla _{{\mu _0}}}({\Sigma ^{ - 1}}{\mu _0} - {\Sigma ^{ - 1}}{x^i})1\{ {y^i} = 0\} } = 0$$ $${\mu _0} = \frac{{\sum\limits _{i = 1}^m {1\{ {y^i} = 0\} {x^i}} }}{{\sum\limits _{i = 1}^m {1\{ {y^i} = 0\} } }}$$同理有:

$${\mu _1} = \frac{{\sum\limits _{i = 1}^m {1\{ {y^i} = 1\} {x^i}} }}{{\sum\limits _{i = 1}^m {1\{ {y^i} = 1\} } }}$$然后对\(\Sigma^{-1}\)求偏导数。

$${\nabla _{{\Sigma ^{ - 1}}}}\ell ({\mu _0},{\mu _1},\Sigma ,\phi ) = \sum\limits _{i = 1}^m {{\nabla _{{\Sigma ^{ - 1}}}}[( - \frac{1}{2}z _0^T{\Sigma ^{ - 1}}{z _0} + \frac{1}{2}\ln \frac{1}{{|\Sigma |}})1\{ {y^i} = 0\} + ( - \frac{1}{2}z _1^T{\Sigma ^{ - 1}}{z _1} + \frac{1}{2}\ln \frac{1}{{|\Sigma |}})1\{ {y^i} = 1\} ]}$$这里分开计算:

$${\nabla _{\Sigma ^{ - 1}}}(z _0^T{\Sigma ^{ - 1}}{z _0}) = {\nabla _{\sum ^{ - 1}}}tr(z _0^T{\Sigma ^{ - 1}}{z _0}) = {\nabla _{\sum ^{ - 1}}}tr({\Sigma ^{ - 1}}{z _0}z _0^T) = {({z _0}z _0^T)^T} = {z _0}z _0^T$$ $${\nabla _{\Sigma ^{ - 1}}}\ln \frac{1}{|\Sigma |} = {\nabla _{\Sigma ^{ - 1}}}\ln |{\Sigma ^{ - 1}}| = \frac{1}{|{\Sigma ^{ - 1}}|}{\nabla _{\Sigma ^{ - 1}}}{\Sigma ^{ - 1}} = {({({\Sigma ^{ - 1}})^{ - 1}})^T}$$所以有:

$${\nabla _{{\sum ^{ - 1}}}}\ell ({\mu _0},{\mu _1},\Sigma ,\phi ) = \sum\limits _{i = 1}^m {[( - \frac{1}{2}{z _0}z _0^T + \frac{1}{2}\Sigma )1\{ {y^i} = 0\} + ( - \frac{1}{2}{z _1}z _1^T + \frac{1}{2}\Sigma )1\{ {y^i} = 1\} ]} = 0$$ $$\Sigma = \frac{1}{m}\sum\limits _{i = 1}^m {[({x^i} - {\mu _0}){{({x^i} - {\mu _0})}^T}1\{ {y^i} = 0\} + ({x^i} - {\mu _1}){{({x^i} - {\mu _1})}^T}1\{ {y^i} = 1\} ]}$$ $$\Sigma = \frac{1}{m}\sum\limits _{i = 1}^m {[({x^i} - {\mu _{{y^i}}}){{({x^i} - {\mu _{{y^i}}})}^T}]}$$2.3 高斯型算法实例

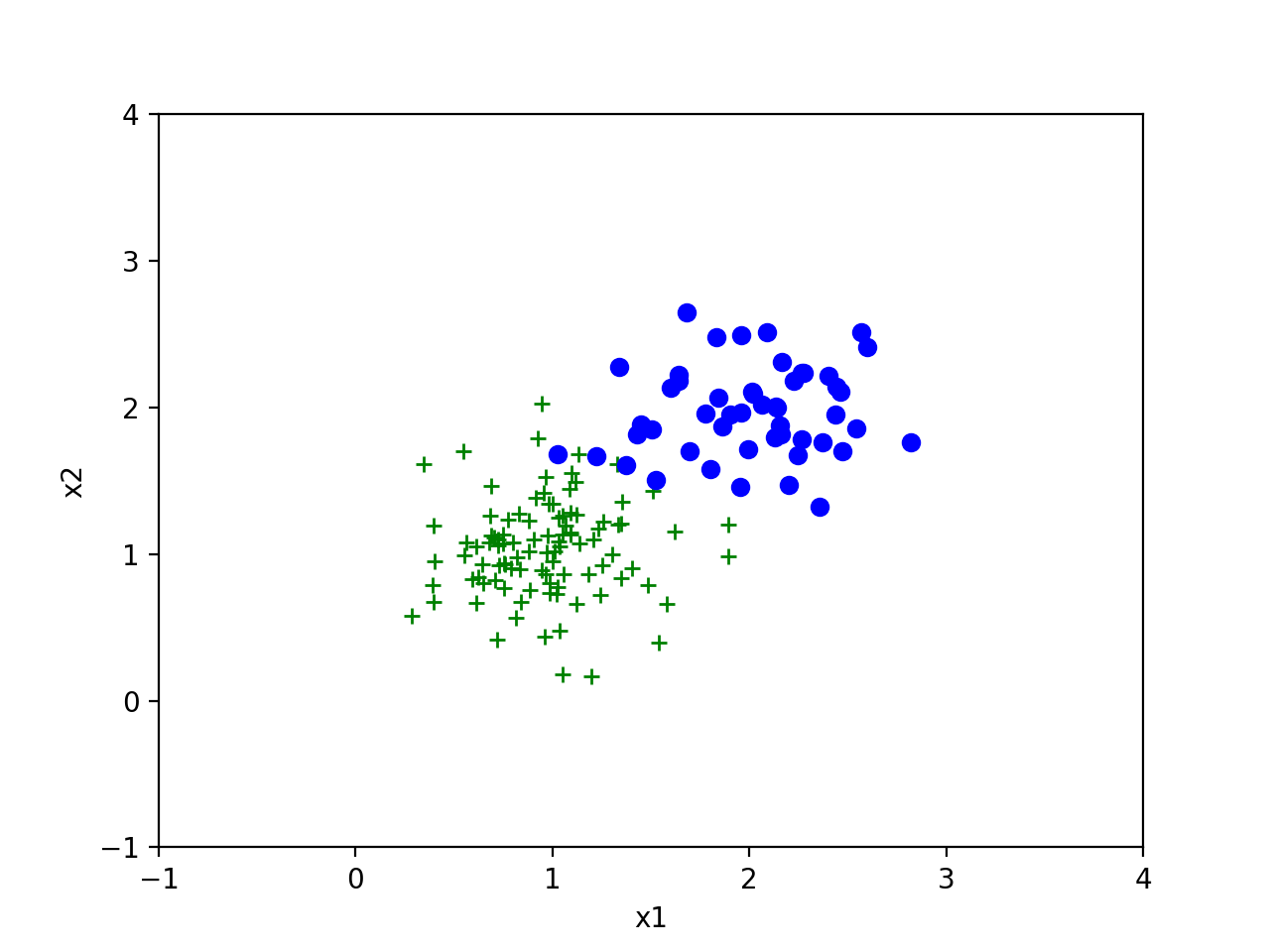

我们分别以 \((1,1)\)和\((2,2)\)为均值生成一组高斯分布。下面图是生成的样本。

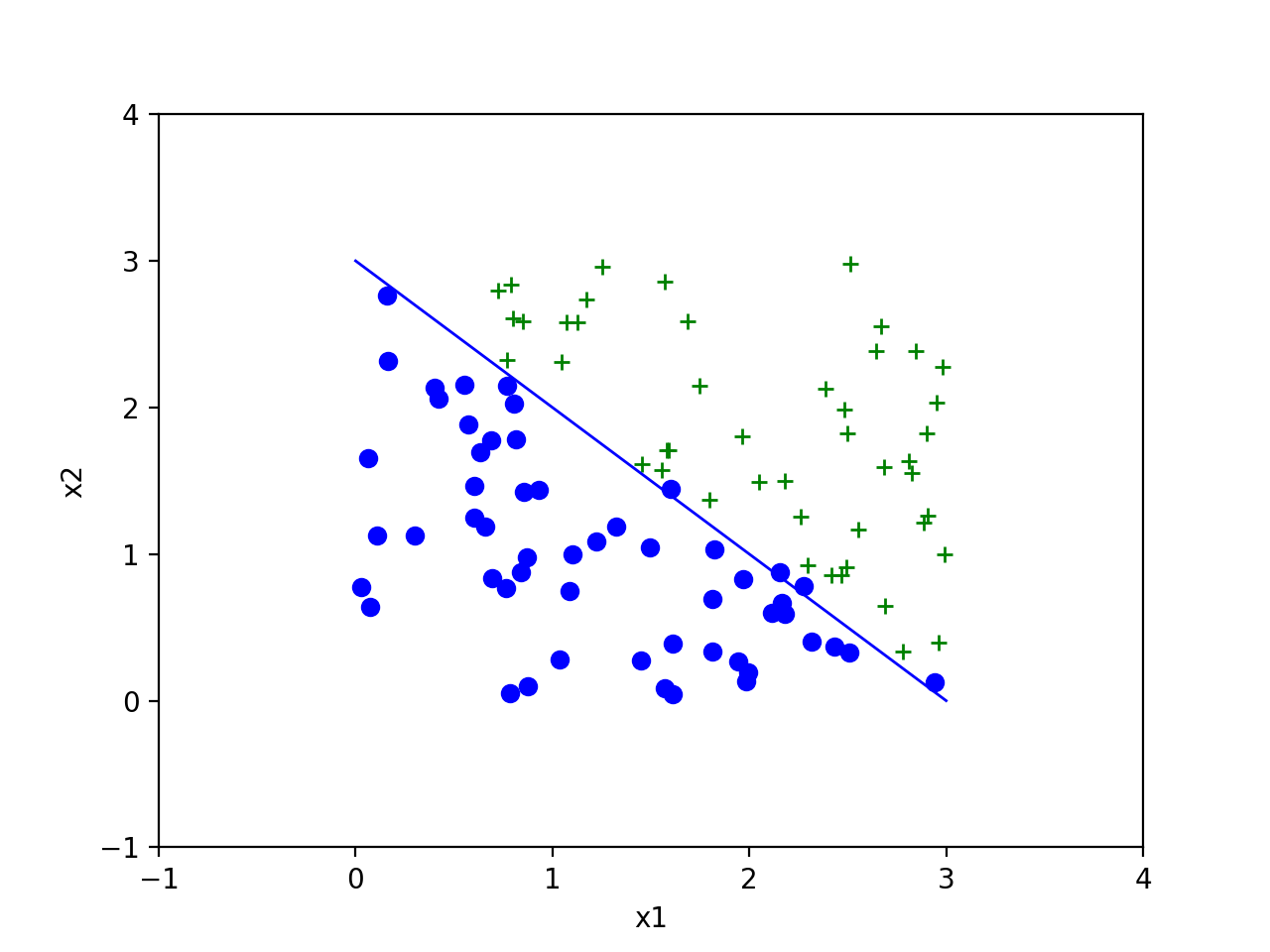

前提假设是我们知道两组分类是符合高斯分布的,切假定协方差相同。但我们不知道两组数据的均值和协方差。根据前面的公式有如下代码:

1 | import numpy as np |

对于两个协方差相同的样本,我们知道两组数据有相同的概率分布曲线,具体都应该是一个个同心圆。因此,我们可以确定一条垂直于两点连线的曲线是对该样本的理论上正确的分割。因此,我们得到如下结果,并与理论分割的曲线,即\(y=-x+3\)比较,发现对我们随机产生的样本分类效果非常好。

附录 A 相关公式

Hessian矩阵定义:

$${\nabla _A}f(A) = \left( {\begin{array}{*{20}{c}} {\frac{{\partial f}}{{\partial {A _{11}}}}}& \ldots &{\frac{{\partial f}}{{\partial {A _{1n}}}}} \\\ \vdots & \ddots & \vdots \\\ {\frac{{\partial f}}{{\partial {A _{n1}}}}}& \cdots &{\frac{{\partial f}}{{\partial {A _{nn}}}}} \end{array}} \right)$$矩阵的迹的定义:

$$trA = \sum\limits _{i = 1}^n {{A _{ii}}}$$关于矩阵迹的公式:

$$tr(a) = a$$ $$tr(aA) = atr(A)$$ $$tr(aA) = atr(A)$$ $$tr(ABC) = tr(CAB) + tr(BCA)$$ $$trA = tr{A^T}$$ $$tr(A + B) = trA + trB$$ $${\nabla _A}tr(AB) = {B^T}$$ $${\nabla _{A^T}}f(A) = {({\nabla _A}f(A))^T}$$ $${\nabla _{A^T}}trAB{A^T}C = CAB + {C^T}A{B^T}$$ $${\nabla _{A^T}}trAB{A^T}C = CAB + {C^T}A{B^T}$$对矩阵做展开能证明上述大部分公式,这里暂时略。

附录B

手写公式和word版博客地址:

1 | https://github.com/zhengchenyu/MyBlog/tree/master/doc/mlearning/生成型学习算法 |